Understanding Tokenization: The Basics Explained

Tokenization replaces sensitive data with non-sensitive stand-ins called tokens. Organizations use it to swap information such as credit card numbers or personal identifiers for unique tokens that work within a specific system but reveal nothing about the original data if they are exposed.

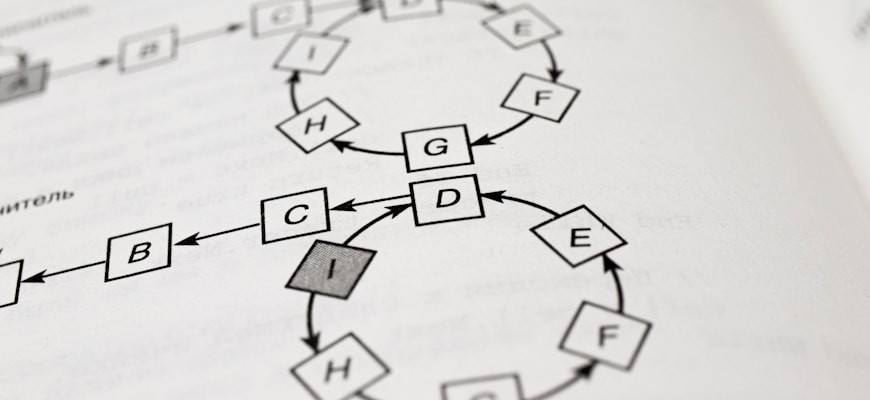

The tokenization process involves several key steps, each designed to ensure data protection while maintaining functionality. Understanding these steps is essential for businesses looking to implement tokenization effectively.

- Data Identification: The first step involves identifying sensitive information that requires protection. This could include personal data, financial details, or confidential business information.

- Token Generation: Once sensitive data is identified, unique tokens are generated. These tokens serve as placeholders for the original data, so the actual information never has to be stored in the systems that handle the tokens.

- Mapping Tokens: A secure mapping system, often called a token vault, links tokens back to the original data. This mapping is typically stored in a hardened database that only authorized personnel and systems can access.

- Implementation: The generated tokens are integrated into existing systems and processes, allowing for seamless transactions without exposing sensitive data.

- Ongoing Management: Continuous monitoring and management of the tokenization system are essential to ensure security and compliance with regulations. Regular audits and updates help maintain the integrity of the tokenization process.

By following these steps, businesses can implement tokenization effectively, enhancing data security while keeping transactions functional. A well-run tokenization system protects sensitive information and builds trust with customers, fostering a safer digital environment.
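The token generation and mapping steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `TokenVault` class, the `tok_` prefix, and the in-memory dictionaries are all hypothetical, and a real vault would sit in an encrypted, access-controlled database.

```python
import secrets


class TokenVault:
    """In-memory stand-in for the secure mapping store (illustrative only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it has no mathematical link to the value.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_value[token]


vault = TokenVault()
card_number = "4111111111111111"  # widely used test card number, not real data
token = vault.tokenize(card_number)

# Downstream systems store and pass around only the token.
order = {"order_id": 1001, "payment_token": token}
```

Because the token is generated randomly instead of being derived from the card number, someone who sees `order` learns nothing about the original value without access to the vault.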

The Importance of Tokenization in Digital Transactions

Tokenization enhances security and privacy in digital transactions for users and organizations alike. Because systems store and pass unique tokens instead of credit card numbers or personal identification information, a breach exposes far less usable data. This protects users’ sensitive information and can also streamline the transaction process.

Tokenization matters most in digital finance. As cyber threats continue to evolve, businesses must adopt stronger security measures. Tokenization limits the damage of an interception: an attacker who captures tokenized transaction data obtains tokens that are meaningless outside the system that issued them, not the underlying sensitive information. This shift in data handling helps build trust between consumers and businesses.

- Enhanced Security: By using tokens, organizations can safeguard sensitive information from potential breaches.

- Compliance with Regulations: Tokenization aids in meeting various compliance requirements, such as PCI DSS, which is vital for businesses handling payment information.

- Improved User Experience: Customers enjoy a seamless and secure transaction experience, encouraging repeat business and loyalty.

- Cost Efficiency: Reducing the risks associated with data breaches may lower insurance premiums and fraud-related losses.

Furthermore, the implementation of tokenization can be a game-changer in the rapidly evolving landscape of e-commerce. As more consumers shift towards online shopping, businesses must prioritize secure payment solutions. Tokenization not only protects customer data but also instills confidence in the digital transaction process, making it a vital component for any organization aiming to thrive in the digital marketplace.

In conclusion, the significance of tokenization in digital transactions should not be underestimated. Implementing this technology enhances security, supports compliance, and improves the overall user experience, helping businesses safeguard sensitive information in every digital transaction.

Step 1: Identifying Assets for Tokenization

In blockchain contexts, tokenization has a second meaning: representing ownership of an asset as digital tokens on a blockchain. The first step in that process is to determine which physical or digital assets are suitable for conversion. Tokenizing the right assets can improve liquidity, allow fractional ownership, and make transfers easier.

- Physical Assets: Real estate properties, art pieces, and collectibles can be tokenized. Each of these assets has intrinsic value and can attract a wide range of investors when represented as tokens.

- Digital Assets: Digital art, music, and intellectual property are prime candidates for tokenization. These assets can be easily represented on a blockchain, providing proof of ownership and authenticity.

- Financial Assets: Stocks, bonds, and commodities are also suitable for tokenization. By converting these financial instruments into tokens, they can be traded on various platforms, increasing accessibility for a broader audience.

- Utility Assets: Memberships, loyalty points, and other utility-based assets can benefit from tokenization. This approach enhances user engagement and creates a more dynamic ecosystem.

In summary, identifying the right assets for tokenization involves assessing the nature of the asset, its market demand, and the potential benefits of converting it into a digital token. This evaluation sets the foundation for a successful tokenization strategy, paving the way for greater innovation and investment opportunities.
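The fractional ownership idea mentioned in this step comes down to simple arithmetic, sketched below. The `TokenizedAsset` class, the building, its valuation, and the token count are all made-up figures for illustration, not data from any real offering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenizedAsset:
    name: str
    valuation_cents: int  # asset value in the smallest currency unit
    total_tokens: int     # number of equal fractions issued

    def price_per_token_cents(self) -> int:
        # Integer division keeps the money math exact; any remainder is ignored here.
        return self.valuation_cents // self.total_tokens

    def ownership_share(self, tokens_held: int) -> float:
        if not 0 <= tokens_held <= self.total_tokens:
            raise ValueError("tokens_held must be between 0 and total_tokens")
        return tokens_held / self.total_tokens


# Hypothetical example: a 500,000.00 asset split into 10,000 tokens.
building = TokenizedAsset("Example Building", valuation_cents=500_000_00, total_tokens=10_000)
print(building.price_per_token_cents())  # 5000 cents, i.e. 50.00 per token
print(building.ownership_share(250))     # 0.025, i.e. 2.5% of the asset
```

Splitting the asset into many small units is what lowers the entry price: in this sketch an investor can take a position for 50.00 instead of buying the whole property.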

Step 2: Choosing the Right Blockchain Technology

Choosing the right blockchain technology is crucial for the tokenization process. Various blockchain platforms offer unique features that can significantly impact the functionality and success of the tokens. Factors such as scalability, security, and transaction speed must be considered when selecting the appropriate blockchain for tokenization.

- Ethereum: Known for its robust smart contract capabilities, Ethereum is a popular choice for tokenization. Its extensive developer community and wide adoption make it a favorable option for creating decentralized applications and tokens.

- BNB Smart Chain (formerly Binance Smart Chain): This blockchain typically offers lower transaction fees and faster processing times than the Ethereum mainnet, making it an attractive option for projects focused on cost-efficiency and speed.

- Solana: With its high throughput and scalability, Solana is designed for applications requiring fast transactions. It is an excellent choice for projects anticipating high user activity and engagement.

- Polygon: An Ethereum scaling solution, Polygon enhances transaction speeds and reduces costs while maintaining compatibility with Ethereum’s ecosystem. This makes it suitable for developers looking to leverage Ethereum’s network while improving performance.

- Tezos: The self-amending blockchain Tezos offers on-chain governance, allowing token holders to vote on protocol upgrades. This feature can be beneficial for projects aiming for community involvement and sustainability.

Each blockchain technology presents different advantages and disadvantages, which can influence the tokenization strategy. Developers should evaluate the specific use case and target audience to determine the most suitable blockchain for their tokenization needs.

Furthermore, the choice of blockchain should align with the overall goals of the project. By understanding the unique characteristics of each platform, businesses can better navigate the complexities of the tokenization process and ensure a successful deployment of their tokens.

Step 3: Creating and Issuing Tokens

Creating and issuing tokens is a pivotal step in the tokenization process, transforming the selected assets into blockchain-compatible tokens. This phase involves several critical actions that ensure the successful launch and management of tokens.

- Define Token Standards: Choosing the appropriate token standard is essential. On Ethereum and compatible chains, ERC-20 covers fungible (interchangeable) tokens, while ERC-721 covers non-fungible tokens that each represent a unique item. These standards dictate how tokens behave on the blockchain and how they interoperate with wallets, exchanges, and other platforms.

- Token Design: The design of the token includes its name, symbol, total supply, and divisibility. A well-thought-out design can enhance user adoption and marketability.

- Smart Contract Development: Smart contracts are the backbone of token functionality. They automate processes, enforce rules, and facilitate transactions without intermediaries.

- Testing and Auditing: Rigorous testing and third-party auditing of the smart contract are crucial to identify vulnerabilities and ensure security, thus building trust among potential users.

- Token Minting: Once the smart contract is finalized, the process of minting tokens can commence. This step involves generating the actual tokens that will be distributed to users.

- Token Distribution: After minting, tokens need to be distributed to investors, users, or stakeholders, typically through Initial Coin Offerings (ICOs) or airdrops.

By following these steps, the tokenization process not only creates a digital representation of assets but also opens avenues for liquidity and broader market participation. The successful issuance of tokens contributes to the overall value proposition of the project, driving interest and investment in the token ecosystem.
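The design, minting, and distribution steps above can be modeled with a toy ledger. This sketch is plain Python, not a smart contract, and it does not run on any blockchain; the `SimpleToken` class and the account names are hypothetical. It borrows only the general shape of a fungible token (name, symbol, decimals, total supply, balances) to show how the pieces relate.

```python
class SimpleToken:
    """Toy fungible-token ledger, loosely modeled on ERC-20 semantics."""

    def __init__(self, name: str, symbol: str, decimals: int, owner: str):
        self.name = name
        self.symbol = symbol
        self.decimals = decimals  # divisibility: 1 token = 10**decimals base units
        self.owner = owner
        self.total_supply = 0
        self._balances = {}

    def balance_of(self, account: str) -> int:
        return self._balances.get(account, 0)

    def mint(self, caller: str, to: str, amount: int) -> None:
        # Minting creates new base units; only the owner may do it in this sketch.
        if caller != self.owner:
            raise PermissionError("only the owner can mint")
        if amount <= 0:
            raise ValueError("amount must be positive")
        self._balances[to] = self.balance_of(to) + amount
        self.total_supply += amount

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        # Distribution moves existing units; it never changes total_supply.
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balance_of(sender) < amount:
            raise ValueError("insufficient balance")
        self._balances[sender] = self.balance_of(sender) - amount
        self._balances[recipient] = self.balance_of(recipient) + amount


ext = SimpleToken("Example Token", "EXT", decimals=2, owner="issuer")
ext.mint("issuer", "issuer", 1_000_00)   # mint 1,000.00 EXT, expressed in base units
ext.transfer("issuer", "alice", 250_00)  # distribute 250.00 EXT to one holder
```

In a real project these rules would live in an audited smart contract, which is why the testing and auditing step comes before minting: once deployed, mistakes in the mint or transfer logic are hard to undo.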

Step 4: Ensuring Compliance and Security in Tokenization

Ensuring compliance and security in the tokenization process is a critical aspect that cannot be overlooked. Organizations must adhere to various regulations and standards to protect sensitive data during tokenization. This involves implementing robust security measures and maintaining compliance with industry requirements.

- Identify relevant regulations: Understanding the legal landscape surrounding data protection and tokenization is essential. Regulations such as GDPR, PCI DSS, and others play a significant role in shaping tokenization practices.

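- Implement strong encryption: Encrypting the original data wherever it is stored, including the token-to-data mapping, and while it is in transit protects sensitive information from unauthorized access and potential breaches. Tokens themselves carry no sensitive content, so the mapping store is what most needs protection.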

- Regular audits and assessments: Conducting periodic audits helps organizations assess their tokenization practices and ensure compliance with applicable standards. Regular assessments can identify vulnerabilities and areas for improvement.

- Establish access controls: Limiting access to tokenization systems is crucial. Implementing role-based access controls ensures that only authorized personnel can interact with sensitive data and tokenization processes.

- Monitor and respond to threats: Continuous monitoring of tokenization systems allows for the rapid detection of potential security threats. Organizations should have incident response plans in place to address any security breaches promptly.

By focusing on compliance and security, organizations can enhance their tokenization efforts while maintaining the integrity of sensitive information. A well-structured approach to compliance not only safeguards data but also fosters trust among customers and partners, ensuring a successful tokenization strategy.
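The access control and monitoring points above can be combined in one small sketch: every detokenization request is checked against a role table and recorded for later audit. The role names, the `ROLE_PERMISSIONS` table, and the dictionary-based vault are all assumptions made for illustration; a real system would load its policy from configuration and write the log to tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission table for this example.
ROLE_PERMISSIONS = {
    "payments_service": {"tokenize", "detokenize"},
    "support_agent": {"tokenize"},
}

audit_log = []


def authorize(role: str, action: str) -> bool:
    """Return True if the role may perform the action, and record the attempt."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed


def detokenize(role: str, token: str, vault: dict) -> str:
    # Refuse before touching the vault if the role lacks the permission.
    if not authorize(role, "detokenize"):
        raise PermissionError(f"role {role!r} may not detokenize")
    return vault[token]


vault = {"tok_example": "sensitive-value"}  # placeholder data for the demo
result = detokenize("payments_service", "tok_example", vault)

try:
    detokenize("support_agent", "tok_example", vault)
except PermissionError:
    pass  # the denied attempt is still written to the audit log

denied = [entry for entry in audit_log if not entry["allowed"]]
```

Logging denied attempts as well as successful ones gives periodic audits something concrete to review, and a spike in `denied` entries is the kind of signal a monitoring process could alert on.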
